Cloud-Native Application 101

Moving to the Cloud is not only a new way to operate infrastructure. It is also a new way to design applications, based on the capabilities that an on-demand service can provide.

This topic provides quick and easy steps toward a resilient infrastructure.

General Information

High Availability

Downtime

In a year, how long can you afford to have your service down? Today, "five nines" (99.999% availability) is the target for major web players. To reach that target, a flexible and resilient infrastructure is mandatory.

| Availability rate | Maximum downtime per year |
|---|---|
| 99% | 3.65 days |
| 99.9% | 8.76 hours |
| 99.99% | 52.56 minutes |
| 99.999% (five nines) | 5.26 minutes |
| 99.9999% | 31.5 seconds |
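
The downtime figures follow directly from the availability rate. As a minimal sketch, assuming a 365-day year (which matches the values listed here), the function below converts a rate into allowed downtime. The name `downtime_seconds_per_year` is illustrative only.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # assumption: non-leap year

def downtime_seconds_per_year(availability_percent: float) -> float:
    """Return the allowed downtime, in seconds, for one 365-day year."""
    return SECONDS_PER_YEAR * (1 - availability_percent / 100)

for rate in (99.0, 99.9, 99.99, 99.999, 99.9999):
    seconds = downtime_seconds_per_year(rate)
    print(f"{rate}% -> {seconds / 60:.2f} minutes per year")
```

For example, 99.999% leaves about 315 seconds, or roughly 5.26 minutes, of downtime per year.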

Performance

We consider that a website must respond within 5 seconds at most, and should aim for an average response time of 2 to 3 seconds. Load balancing provides an easy way to achieve this, keeping performance steady regardless of the number of website sessions.

However, multiplying layers and services can slow down the overall user experience. For that reason, you should consider designing your solution with asynchronous communication mechanisms that rely on a communication bus, such as RabbitMQ (which implements AMQP) or Apache Kafka (which uses its own protocol).
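
To illustrate the idea of asynchronous communication, here is a minimal sketch in which a producer hands messages to a worker through a queue instead of waiting for the work to finish. It uses Python's standard `queue.Queue` as an in-process stand-in for a real bus such as RabbitMQ or Kafka. The function name `run_demo` and the message contents are hypothetical.

```python
import queue
import threading

def run_demo(messages):
    """Send messages through a queue and return what the worker processed."""
    bus = queue.Queue()   # in-process stand-in for a message broker (assumption)
    processed = []

    def worker():
        while True:
            item = bus.get()
            if item is None:                 # sentinel: no more messages
                break
            processed.append(item.upper())   # placeholder for slow work

    consumer = threading.Thread(target=worker)
    consumer.start()

    for message in messages:
        bus.put(message)   # returns immediately; the producer does not wait for the work
    bus.put(None)
    consumer.join()
    return processed

print(run_demo(["order-1", "order-2"]))  # ['ORDER-1', 'ORDER-2']
```

With a real bus, the producer and the worker would run on separate nodes, and the bus would keep the messages if a worker fails.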

Redundancy

To guarantee your customers maximum availability whatever happens in a physical datacenter, you can distribute your resources across our Regions and Subregions. For more information, see About Regions and Subregions.
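
As a rough illustration of why redundancy helps, the sketch below estimates the availability of a service that stays up as long as at least one replica is up. It assumes that replicas fail independently, which is optimistic in practice, and the figures it returns are not guarantees for any particular Region or Subregion. The function name `combined_availability` is illustrative.

```python
def combined_availability(node_availability: float, replicas: int) -> float:
    """Availability of a service that is up while at least one replica is up.

    Assumption: replica failures are independent of each other.
    """
    if not 0 <= node_availability <= 1:
        raise ValueError("node_availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return 1 - (1 - node_availability) ** replicas

# Two replicas at 99% each give about 99.99% under the independence assumption.
print(f"{combined_availability(0.99, 2):.4%}")
```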

What to Leverage

Software Architecture

After designing your infrastructure, you can improve your existing application, or design a new one, according to rules that bring it in line with the state of the art for clustered applications. You can also design your software to be fault tolerant. For more information, see Netflix Chaos Monkey.

Architecture

Philosophy

The main thing to do is to install each service or application on its own virtual machine (VM) and create an OUTSCALE machine image (OMI) from it. This enables you to easily replicate a VM and deploy it several times. For more information, see Creating an OMI.

To avoid a single point of failure (SPOF), each service needs to be provided by several VMs. A VM with a service is called a "node", and the collection of "nodes" providing a service is called a "cluster". For more information, see Computer Cluster Wikipedia.

Each cluster contains a load balancer to which traffic is routed, instead of directly to a VM.
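
The routing role of a load balancer in front of a cluster can be sketched as follows. This is a simplified round-robin model for illustration only, not how any particular load balancing service is implemented. The class name `RoundRobinBalancer` and the node names are hypothetical.

```python
import itertools

class RoundRobinBalancer:
    """Hand out the nodes of a cluster in turn, skipping nodes marked as down."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.healthy = set(self.nodes)
        self._cycle = itertools.cycle(self.nodes)

    def mark_down(self, node):
        self.healthy.discard(node)

    def mark_up(self, node):
        if node in self.nodes:
            self.healthy.add(node)

    def next_node(self):
        # At most len(nodes) steps are needed to reach a healthy node.
        for _ in range(len(self.nodes)):
            node = next(self._cycle)
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy node left in the cluster")

balancer = RoundRobinBalancer(["node-a", "node-b", "node-c"])
balancer.mark_down("node-b")
print([balancer.next_node() for _ in range(4)])
# ['node-a', 'node-c', 'node-a', 'node-c']
```

Because clients only ever talk to the balancer, a failed node can be taken out of rotation without clients noticing.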

Best Practices

- Databases do not work well behind load balancers. We recommend that you deploy your database services in cluster mode, for example with Percona for MySQL.

- Each critical service or job should run on its own VM, as in a 3-tier pattern.

- Apart from databases, we recommend that you make your services stateless, so that nodes in your cluster can fail without interrupting the service for your users.

- Consider designing your software solution with asynchronous communication mechanisms.

- Your infrastructure must scale out, not scale up. That is, when it is overloaded, you need to add nodes instead of resizing a single node.
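
Scaling out can be reasoned about with simple arithmetic. The sketch below estimates how many identical nodes a cluster needs for a given load, assuming that each node handles a known number of requests per second and that you keep some spare nodes to absorb failures. The function name, parameters, and figures are hypothetical.

```python
import math

def nodes_needed(requests_per_second: float,
                 capacity_per_node: float,
                 spare_nodes: int = 1) -> int:
    """Return how many identical nodes a cluster needs for the given load."""
    if capacity_per_node <= 0:
        raise ValueError("capacity_per_node must be positive")
    base = math.ceil(requests_per_second / capacity_per_node)
    return max(base, 1) + spare_nodes   # always keep at least one working node

# 450 requests/s with nodes that each handle 200 requests/s:
print(nodes_needed(450, 200))  # 4 (3 to carry the load, 1 spare)
```

When the load grows, you rerun the calculation and add nodes, rather than moving the service to a larger VM.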

Databases

Never hot snapshot the volume of a database. Because of continuous I/O, this can lead to fatal integrity issues with your data.

The best way to back up a database is to log all transactions, which enables you to "replay" them and keep your database up to date.
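
The principle of replaying a transaction log can be sketched with a toy key-value store. Real database engines provide their own logging and recovery mechanisms, so refer to your database documentation for actual procedures. The log format and the names `apply_transaction` and `replay` are assumptions made for this illustration.

```python
import json

def apply_transaction(state: dict, transaction: dict) -> None:
    """Apply one logged transaction to an in-memory key-value state."""
    if transaction["op"] == "set":
        state[transaction["key"]] = transaction["value"]
    elif transaction["op"] == "delete":
        state.pop(transaction["key"], None)
    else:
        raise ValueError(f"unknown operation: {transaction['op']}")

def replay(log_lines, state=None) -> dict:
    """Rebuild a state by replaying logged transactions in order."""
    state = {} if state is None else dict(state)
    for line in log_lines:
        apply_transaction(state, json.loads(line))
    return state

# Hypothetical log: one JSON document per transaction, oldest first.
log = [
    json.dumps({"op": "set", "key": "stock", "value": 10}),
    json.dumps({"op": "set", "key": "stock", "value": 9}),
    json.dumps({"op": "set", "key": "cart", "value": 1}),
    json.dumps({"op": "delete", "key": "cart"}),
]
print(replay(log))  # {'stock': 9}
```

Starting from an older backup passed as `state`, replaying only the transactions logged since that backup brings the data up to date.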

Databases are not designed to be placed behind a load balancer. However, most of them can be configured as a cluster. For more information about setting up your database, see your database documentation, Eventual consistency, and CAP theorem.

Communication Buses

Like databases, communication buses (RabbitMQ, Kafka) can be configured as clusters instead of being placed behind load balancers.

Self-Healing and Reliability

We highly recommend using supervision tools that can terminate failing nodes and launch new ones when a node encounters a problem.
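
One pass of such a supervision loop can be sketched as follows. The health check, termination, and launch actions are passed in as plain functions, because they depend on your supervision tool and cloud API. All the names used here are hypothetical, and the demo uses in-memory fakes instead of real VMs.

```python
import itertools

def supervise(nodes, is_healthy, terminate, launch, desired_count):
    """Run one supervision pass and return the updated list of nodes."""
    survivors = []
    for node in nodes:
        if is_healthy(node):
            survivors.append(node)
        else:
            terminate(node)              # remove the failing node
    while len(survivors) < desired_count:
        survivors.append(launch())       # start a replacement, for example from an image
    return survivors

# Demo with in-memory fakes (assumptions, not real cloud calls).
ids = itertools.count(1)
terminated = []

def launch():
    return f"node-{next(ids)}"

cluster = [launch() for _ in range(3)]   # node-1, node-2, node-3
cluster = supervise(
    cluster,
    is_healthy=lambda node: node != "node-2",   # pretend node-2 has failed
    terminate=terminated.append,
    launch=launch,
    desired_count=3,
)
print(cluster)      # ['node-1', 'node-3', 'node-4']
print(terminated)   # ['node-2']
```

In practice, a supervision tool runs this kind of check on a schedule, so the cluster returns to its desired size without manual intervention.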

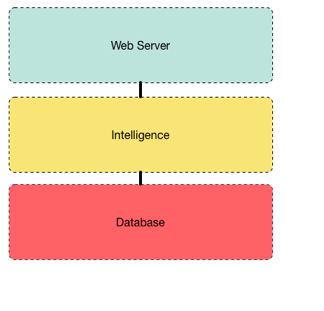

Software Stack Organization

Split your services across a 3-tier website: a front end (nginx), an application tier running your code (Python/PHP/Ruby/…), and a database. Each of these services must run on its own VM.

For each VM where a service is installed, create an OMI that you can later use to deploy multiple nodes.
